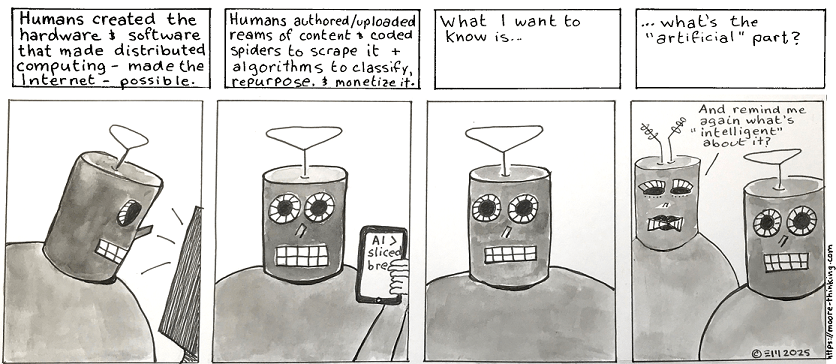

Artificial intelligence in the form of chatbots (software programs that attempt to simulate back-and-forth conversational “chats” with human users) has officially made it to the mainstream.

Chatbots such as ChatGPT, Claude, Gemini, Copilot, and Perplexity make generating text, image, audio, and video content so easy and so inexpensive that AI-generated content is beginning to flood social media platforms such as Facebook. And while AI’s application to date has centered primarily on entertainment (think cat videos) and advertising, it’s also, surprisingly, being used by some as a low-cost alternative to talk therapy.

With all the buzz, it was perhaps inevitable that some folks would begin trying to determine whether this technology could be used for education or training and, if so, how. This post is designed to help you make that determination.

The value-add chatbots are SUPPOSED TO offer education and training

Ask a chatbot such as Google’s Gemini or Microsoft’s Copilot (for example, by typing the question “How could chatbots be applied effectively to education and training?” into Google Search or Bing) and you’ll likely receive a generic list of pie-in-the-sky benefits such as this one:

- Automate tasks

- Enhance engagement

- Improve learning outcomes

- Make education more accessible

- Provide personalized learning experiences

- Offer tutoring

Well, duh. Could the above list possibly be any more obvious… or more useless?

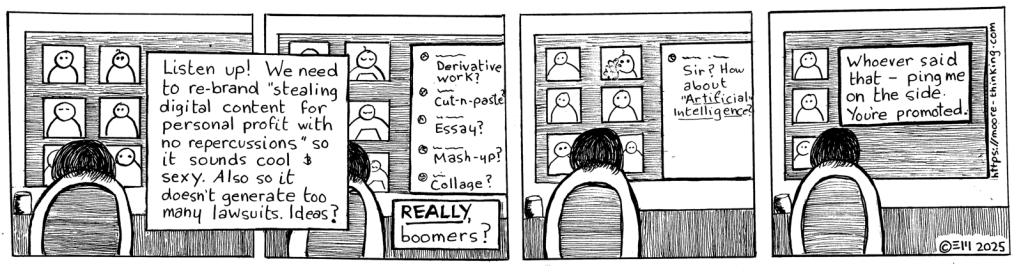

Like politicians and high school students assigned an essay (apologies to any conscientious politicians and high school students reading this), chatbots in their current state are great at skirting direct questions and producing bland, obvious, tangentially related generalizations in lieu of relevant, considered specifics.

Still, they do show promise in certain instructional situations.

How chatbots might ACTUALLY add value to education and training

Rather than figuring out what AI has to offer and then seeing whether we can shoehorn that offering into our instructional design and development processes, it’s more useful to flip the question around: begin with what we want (the gaps we want to fill or the goals we want to reach) and then investigate the extent to which AI might be able to help.

Gap #1: Students using AI to cheat cost instructors time and hassle.

Potential AI value-add: Use an AI content detection tool.

Students can access chatbots just like anyone else, and they have been doing so frequently enough that AI-generated homework assignments have become a problem. Of course, most seasoned teachers can spot a fake a mile away, whether that fake was purchased, plagiarized, or AI-generated. But cheat-detection software has been widely available for a while now, and it comes with an added benefit: it can demonstrate, both to the cheaters and (if it comes to that) to parents and administrators, the percentage of a given assignment that wasn’t the student’s original work and even the actual source(s) of the non-student work.

Not surprisingly, the latest versions of widely available cheat-detection software such as Turnitin are already being enhanced to detect AI-generated material.

Gap #2: Many teachers and trainers tasked with creating high-quality instructional materials have neither the time nor the professional training required to do so.

Potential AI value-add: Use an AI chatbot to speed the creation and development of instructional materials.

Using chatbots in the following ways can help speed up the process of creating learner-facing instructional materials while keeping currency, relevance, authority, accuracy, and purpose (the five criteria of the widely used CRAAP test for evaluating the quality of information) firmly in the hands of instructional content creators.

- Use chatbots to generate ideas. Asking a chatbot to suggest an analogy for a topic, or to describe or explain it, may give us ideas we can use or spark ideas of our own.

- Use chatbots to generate quick rough drafts. Asking a chatbot to produce summaries, step-by-step procedures, instructional images, video scripts, slide deck content, assessment questions, or rubrics for a given topic can provide us with an astonishingly fleshed-out starting point. We’ll still need to question, edit, and expand that starting point, but we will have saved time and perhaps identified organizational approaches we otherwise wouldn’t have thought of.

- Use chatbots to identify ways to edit and improve materials. Some chatbots, such as Claude, let users upload documents that the chatbot can then analyze and draw on in its responses. If a chatbot can’t successfully summarize a document we’ve uploaded, or produce a list of assessment questions based on it, that’s a sign the document probably needs editing for structure, clarity, consistency, and completeness.

Goal #1: Sufficient, appropriate, and affordable enrichment and remediation resources.

Potential AI value-add: Use AI chatbots to provide students with self-service tutoring.

In a perfect world, every teacher and trainer would show up to class not just with a prepared lecture, activities, and assessments designed for audiences sitting in the middle of that storied bell curve, but also with remedial materials to backfill learners on the left side of the curve and enrichment materials to extend the knowledge and skills of learners on the far right. In the real world, of course, that’s often not possible. Instructional materials tend to be one-size-fits-all (skewed, if anything, toward the slowest learners in the room), and as a result we struggle to maximize the instructional experiences of both under-performing and over-performing learners.

AI tutoring chatbots such as those produced by Khan Academy, Carnegie Learning, and McGraw Hill (all organizations with a history of producing interactive educational content) are too sophisticated and expensive for most of us to create in-house, but they could be useful third-party supplemental tools, depending on our subject and audience.

The downside to using AI

AI chatbots come with quite a few negatives.

- Chatbot answers aren’t tied to any authority or accountability, which makes them inappropriate for many instruction-related uses (including the unedited production of learner-facing materials).

- Chatbots are best at producing bland, generic information on basic topics—content that’s readily available from many other sources (and that typically isn’t the content instructors are after).

- It can take us quite a while to learn how to ask chatbots questions in a way that produces useful results.

- Every time we interact with a chatbot, we’re providing valuable content and testing services to the chatbot’s creators free of charge. And because we need to assume that everything we type into a chatbot is retained by its provider (and, presumably, sold or otherwise shared), we need to be mindful of privacy issues around learner data as well as internal organizational data.

The bottom line

Despite its rather obvious negatives, AI is often seen as a silver bullet—especially in time-, expertise-, and cash-strapped contexts like U.S. public education.

But here’s the thing. If you’ve been in the instructional game for any length of time, you’re likely used to hearing “Here’s a new piece of software that will make your life easier as soon as you figure out how to pay for and use it.” In a small percentage of cases (word processing applications, WYSIWYG HTML editors, and cheap, easy-to-use video capture and editing tools), these claims were true.

But far more often, they weren’t.

Cat videos, advertisements, and psychotherapy aside, only time will tell whether AI will be able to deliver measurable, real-world value in instructional contexts.

What’s YOUR opinion?

Do you use AI in the classroom or training room? What benefits has it brought you and your learners? What reservations, if any, do you have regarding its use? Please consider leaving a comment and sharing your hard-won experience with the learning community.
